By Dr. TUNG Chen-Yuan, Taiwan’s Representative to Singapore

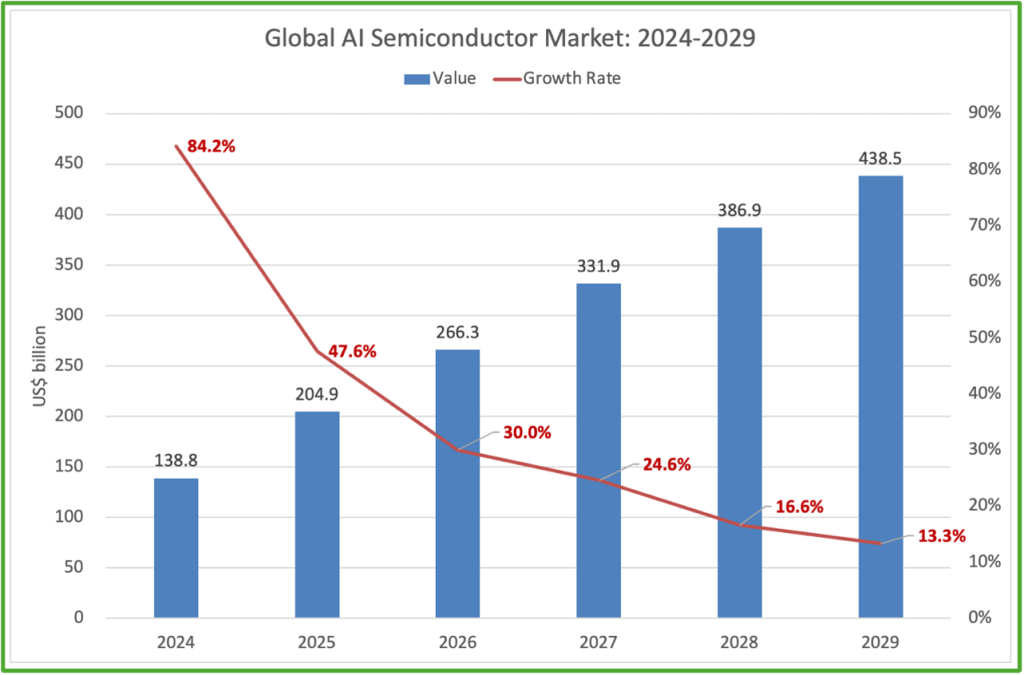

Over the past two years, artificial intelligence (AI) has risen to become the “gravitational center” of the global technology industry. The driving force behind this wave, however, is not merely the evolution of algorithms but a profound transformation in underlying semiconductor technologies. According to data from the Industrial Technology Research Institute (ITRI), the global AI semiconductor market reached US$138.8 billion in 2024, marking AI chips’ transition from a niche application to the primary growth engine of the semiconductor industry.

From Cloud to Edge: A High-Speed Yet Sustainable Growth Trajectory

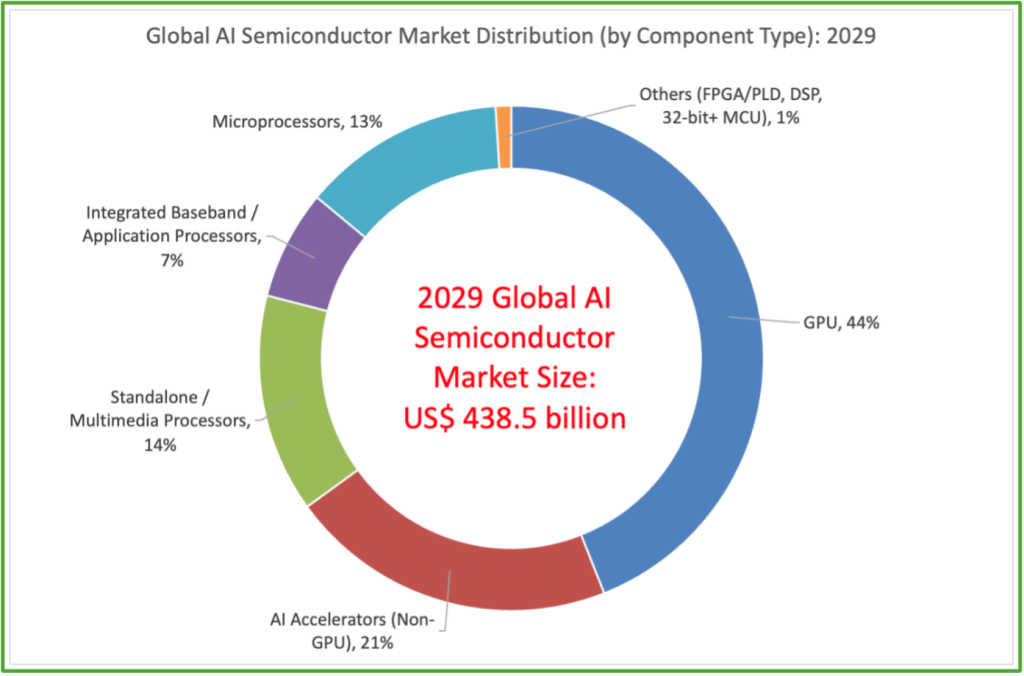

As AI applications expand from cloud data centers toward edge computing environments, the market is entering a phase of rapid yet sustainable growth. The AI semiconductor market is projected to reach US$204.9 billion in 2025, a year-on-year increase of 47.6%. Looking further ahead, the market is expected to grow to US$438.5 billion by 2029, a robust compound annual growth rate (CAGR) of 25.9% from the 2024 base.
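The quoted growth rates can be reproduced from the market sizes alone. The minimal Python sketch below does this. It assumes the 25.9% CAGR is measured over the five years from 2024 to 2029. The base year is not stated in the source data, so this is an assumption, chosen because it reproduces the quoted figure.

```python
# Cross-check of the AI semiconductor growth figures (values in US$ billions).
# Market sizes are the ITRI figures cited in the text; the 2024 base year for
# the CAGR is an assumption.

def growth_rate(start: float, end: float) -> float:
    """Simple period-over-period growth, e.g. year-on-year."""
    return end / start - 1

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate over `years` compounding periods."""
    return (end / start) ** (1 / years) - 1

market = {2024: 138.8, 2025: 204.9, 2029: 438.5}

yoy_2025 = growth_rate(market[2024], market[2025])
cagr_2024_2029 = cagr(market[2024], market[2029], years=2029 - 2024)
cagr_2025_2029 = cagr(market[2025], market[2029], years=2029 - 2025)

print(f"2025 year-on-year growth: {yoy_2025:.1%}")
print(f"CAGR, 2024 to 2029:       {cagr_2024_2029:.1%}")
print(f"CAGR, 2025 to 2029:       {cagr_2025_2029:.1%}")
```

With these inputs, the year-on-year figure rounds to 47.6% and the 2024-based CAGR rounds to 25.9%, both matching the text. Measured from the larger 2025 base instead, the implied rate would be lower, at about 21% a year.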

This momentum is also driving explosive demand for critical supporting components, most notably high-bandwidth memory (HBM). As AI model parameters grow at a geometric pace, HBM has become a strategic resource. Market estimates suggest that HBM demand could increase by more than eightfold over the next decade.

GPUs Remain Dominant, but Decentralization Is Underway

From a market structure perspective, the AI semiconductor industry remained highly concentrated in 2024. Graphics processing units (GPUs), indispensable for large-scale model training and high-performance computing, commanded a 51% market share, firmly maintaining their leadership position. The leading vendors, headed by NVIDIA, have long held more than 90% of the discrete GPU market, making them the clear winners of the AI boom.

That said, the market structure is gradually shifting. By 2029, GPUs are expected to remain the largest single category, but their market share is projected to decline to 44%. This does not signal weakening demand. Because the overall market is projected to more than triple over the same period, a smaller share still implies substantially higher GPU revenue. Rather, the decline reflects the growing need for high-efficiency, workload-specific architectures as the industry moves from GPU-centric dominance toward a more diversified computing landscape.
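The point about share versus demand becomes concrete when the projected shares are converted into dollar values. The minimal Python sketch below simply multiplies the GPU shares cited above by the ITRI market totals. The published shares are rounded to whole percentages, so the results are approximations rather than reported figures.

```python
# Convert projected GPU market shares into approximate dollar values.
# Totals and shares come from the text; shares are rounded in the source,
# so the outputs are estimates, not published figures.

TOTAL_MARKET_BN = {2024: 138.8, 2029: 438.5}  # total AI chip market, US$ billions
GPU_SHARE = {2024: 0.51, 2029: 0.44}          # GPU share of that total

# Implied GPU market value per year = total market x GPU share
gpu_value = {year: TOTAL_MARKET_BN[year] * GPU_SHARE[year] for year in TOTAL_MARKET_BN}

years = 2029 - 2024
gpu_cagr = (gpu_value[2029] / gpu_value[2024]) ** (1 / years) - 1

for year, value in gpu_value.items():
    print(f"{year}: GPU share {GPU_SHARE[year]:.0%}, about US${value:.1f} billion")
print(f"Implied GPU CAGR, 2024 to 2029: {gpu_cagr:.1%}")
```

Under these assumptions, GPU revenue rises from roughly US$71 billion to roughly US$193 billion. That is an implied growth rate of about 22% a year, even as the GPU share of the market falls.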

The Rise of In-House Chips: Cloud Providers Enter the Efficiency Race

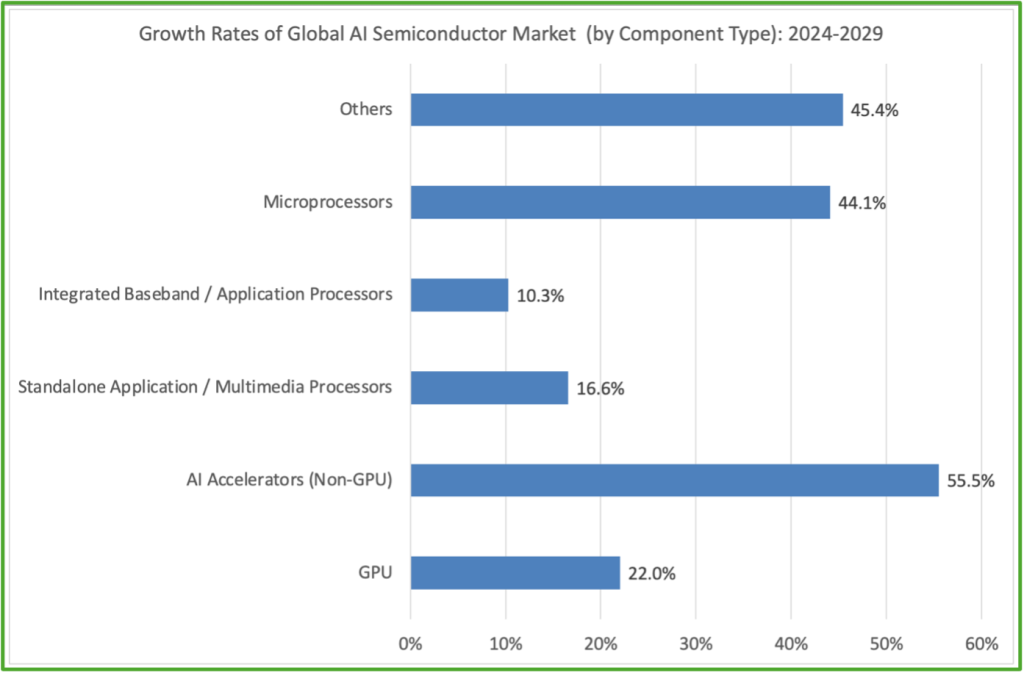

The most transformative force reshaping the industry lies in non-GPU AI accelerators. This segment is projected to grow from 7% market share in 2024 to 21% by 2029, with a striking CAGR of 55.5%, the fastest among all chip categories.

This trend highlights a strategic shift among hyperscale cloud service providers. To optimize energy efficiency and gain greater control over costs, companies such as Google and AWS are accelerating investments in in-house chip development. In 2025, Google’s Tensor Processing Units (TPUs) are projected to account for 40.2% of the AI-specific chip market, while AWS’s Trainium—a chip designed specifically for AI model training—follows closely behind. The AI chip race is no longer a simple contest of raw computing power, but an efficiency-driven competition focused on deep optimization for specific workloads.

The Proliferation of Edge AI: Computing Everywhere

Compared with the rapid transformation in cloud computing, AI adoption in endpoint and edge devices is progressing at a steadier but more pervasive pace. Although the market share of smartphones and consumer electronics is gradually shrinking as the overall market expands, their absolute market value continues to grow.

Particularly noteworthy is the expanding role of microprocessors (MPUs). Their market share is expected to nearly double from 7% to 13%, supported by a 44.1% CAGR. This trend signals AI’s accelerating penetration into industrial automation, automotive electronics, and embedded systems, bringing the vision of “AI everywhere” closer to reality.
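The share and CAGR figures quoted for non-GPU AI accelerators and MPUs can be checked against each other. The rough Python sketch below derives each segment's dollar value from its rounded 2024 and 2029 shares and the ITRI totals, then computes the growth rate those values imply. The source does not provide unrounded shares. Any small gap between the implied and quoted rates is therefore assumed to come from rounding.

```python
# Cross-check each segment's quoted CAGR against the CAGR implied by its
# rounded market shares and the ITRI market totals (US$ billions).
# All inputs come from the text; unrounded shares are not available.

TOTAL_2024, TOTAL_2029 = 138.8, 438.5
YEARS = 2029 - 2024

# segment: (share in 2024, share in 2029, quoted CAGR)
SEGMENTS = {
    "non-GPU AI accelerators": (0.07, 0.21, 0.555),
    "MPUs": (0.07, 0.13, 0.441),
}

def implied_cagr(share_start: float, share_end: float) -> float:
    """CAGR of a segment's dollar value, derived from its shares of the total."""
    start = share_start * TOTAL_2024
    end = share_end * TOTAL_2029
    return (end / start) ** (1 / YEARS) - 1

implied = {name: implied_cagr(s0, s1) for name, (s0, s1, _) in SEGMENTS.items()}

for name, (_, _, quoted) in SEGMENTS.items():
    print(f"{name}: implied {implied[name]:.1%} vs quoted {quoted:.1%}")
```

Both implied rates land within about two percentage points of the quoted figures. That is close enough to suggest the shares and CAGRs describe the same underlying projection.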

Looking Ahead: A Precisely Segmented AI Semiconductor Ecosystem

Looking forward, the AI semiconductor industry is entering a new phase characterized by diversification and clear functional specialization. GPUs will continue to anchor large-scale AI training, in-house accelerators will drive efficiency gains in cloud computing, and edge chips will enable widespread deployment across real-world applications.

Future competition in AI semiconductors will no longer center on individual chips alone. Instead, it will hinge on a comprehensive contest encompassing system architecture, energy efficiency, and advanced manufacturing and packaging supply chains. With control of nearly 70% of global advanced-node manufacturing capacity, TSMC remains a foundational pillar of this rapidly evolving ecosystem.

About the Author:

Dr. Tung Chen-Yuan is currently Taiwan’s Representative to Singapore. He was Minister of the Overseas Community Affairs Council of the Republic of China (Taiwan) from June 2020 until January 2023. He was Taiwan’s ambassador to Thailand from July 2017 until May 2020, senior advisor at the National Security Council from October 2016 until July 2017, and Spokesman of the Executive Yuan from May to September 2016. Before taking office, Dr. Tung was a distinguished professor at the Graduate Institute of Development Studies, National Chengchi University (Taiwan). He received his Ph.D. degree in international affairs from the School of Advanced International Studies (SAIS), Johns Hopkins University. From September 2006 to May 2008, he was vice chairman of the Mainland Affairs Council, Executive Yuan. His areas of expertise include international political economy, China’s economic development, and prediction markets.